Okay, let’s face it: we are living in the absolute golden age of AI.

You know the drill. You log into a new SaaS tool, check out a documentation site, or even just open a help center, and there it is. That little "sparkle" icon. It’s everywhere.

Every product team seems to be racing to slap a "Chat with AI" button in the bottom right corner, promising us the moon.

The pitch is undeniably seductive: Why waste your time digging through search results when you can just ask a friendly, human-like assistant? 👀

It sounds great on paper.

But we recently stumbled upon a piece of data that suggests the reality is a little messier. In fact, it looks like we might be solving a problem that users (especially the technical ones) don't actually feel they have.

We ran a quick poll on LinkedIn, asking a super simple question: "How do you prefer to use a knowledge base?"

The options were pretty standard:

- Search (Good ol' Keywords/CMD+K)

- Browse (Clicking through menus)

- Ask an AI Assistant

The result? Nobody chose the AI assistant. Zero. Zilch. Nada.

In a tech landscape that is absolutely obsessed with Large Language Models (LLMs) and Generative AI, that silence is deafening. Founders are raising millions, and VCs are pouring in billions.


It’s a stark contrast to the roadmap of basically every product team out there right now. And it forces us to ask a tough, slightly uncomfortable question that's probably on all our minds right now: Are we building these features because they actually help people, or just because they’re cool tech? 🤔

To figure this out, we have to look past the hype cycle and get into the messy psychology of how we actually use computers, the economics of our attention span, and why trust is so hard to earn in a technical environment.

The Job to Be Done: Friction vs. Flow

So, why did the poll skew so heavily away from AI? The answer is probably buried in the core "Job to be Done."

Think about the last time you visited a knowledge base.

You probably weren't there to browse casually like you're wandering through a bookstore. You were likely there because you were stuck. You hit an error, you forgot a command, or you needed to configure some obscure setting. You were probably slightly annoyed being there in the first place.

You are in a state of negative friction. You just want to get un-stuck. Your goal is resolution, and you want it to be as fast and painless as possible so you can get back to your actual work.

The industry assumes that conversation is the most natural way to fix this. After all, asking a human expert is usually the gold standard, right?

But here’s the catch: an AI chatbot isn't a human expert. It’s a text-processing engine, and talking to it carries a hidden cost that we often ignore.

The Tax on Conversation

In User Experience (UX) design, there’s this concept called Interaction Cost. Basically, it’s the sum of all the mental and physical energy you have to spend to get what you want from a website.

The Nielsen Norman Group has a neat video that explains the idea.

Here's the kicker: conversation is actually high-friction.

While "natural language" sounds easy, it imposes a heavy cognitive tax. Let’s compare the two ways you might try to solve a problem:

1. The Deterministic Path (Search & Browse): This is you hitting CMD+K and typing "API key" or "Where to find API key":

- Physical Effort: Almost zero. It’s muscle memory.

- Brain Power: Low. You just need the keyword.

- Feedback: Instant. You see a list: "Reset Password," "Reset API Key." Or you get a summary that explains the basic concept from the articles.

- Result: You click, you read, you fix. It’s a straight line.

2. The Probabilistic Path (Chatbot): To use a chatbot, you have to switch gears. You have to stop "doing" and start "explaining."

- Formulation: You have to write a sentence: "How do I reset my API key in the dashboard?" You have to make sure you use the right words so the bot gets it.

- The "Waiting Game": You wait. Sure, modern AI is fast, but watching text stream onto a screen feels like an eternity compared to the instant snap of a search result.

- The Read: You have to parse a whole paragraph just to find that one line of code you needed.

- The Trust Check: You have to figure out if the AI actually understood you or if it’s just making stuff up (more on that in a second).

For a developer or a power user, the chat process feels like taking the scenic route when you're running late for a meeting. They don't want a conversation; they want the data.

The Headache of Prompt Engineering

There is a funny irony in the rise of AI. We promised it would make computers understand humans, but in practice, humans are forced to learn how to talk to computers.

We’re all becoming painfully aware that if we ask the AI a "bad" question, we get a "bad" answer. This creates what I like to call Prompt Anxiety.

- "Did I give it enough context?"

- "Should I paste the whole error log?"

- "Did I mention I'm on version 2.0?"

- "Was I nice enough to it and say please?"

In a traditional search bar, if you type "error 500," you get a list of articles. If they look wrong, you just add a word: "error 500 gateway." You fix it in milliseconds.

In a chat interface, if the answer is wrong, you have to read a paragraph of apology ("I'm sorry, I didn't quite catch that...") and then re-type your whole query. The cost of failure in a chat is just way higher.

Trust Issues and the Black Box

Beyond the friction, there is the massive, looming issue of trust. When you browse a documentation site using the sidebar, you are building a mental map of the product.

You can see the structure:

- Getting Started

- Core Concepts

- Advanced Configuration

- API Reference

You know where you are. This context is huge for learning. You might stumble upon a related setting just because it was right next to the one you were looking for.

You absorb the scope of the tool just by looking at the menus.

An AI chatbot is a black box. You ask a question, and it spits out an answer from the void. You lose that peripheral vision. You have no idea if the answer came from the "Getting Started" guide (basic info) or the "Legacy API" docs (dangerous info).

You are flying blind, trusting a pilot you can't see.

The Hallucination Hangover

Let's be real: trust in AI is a bit shaky right now. Models are getting smarter, sure, but "hallucinations" (when the AI confidently lies to you) are still a plague, especially in tech.

And it doesn't help that AI is so agreeable.

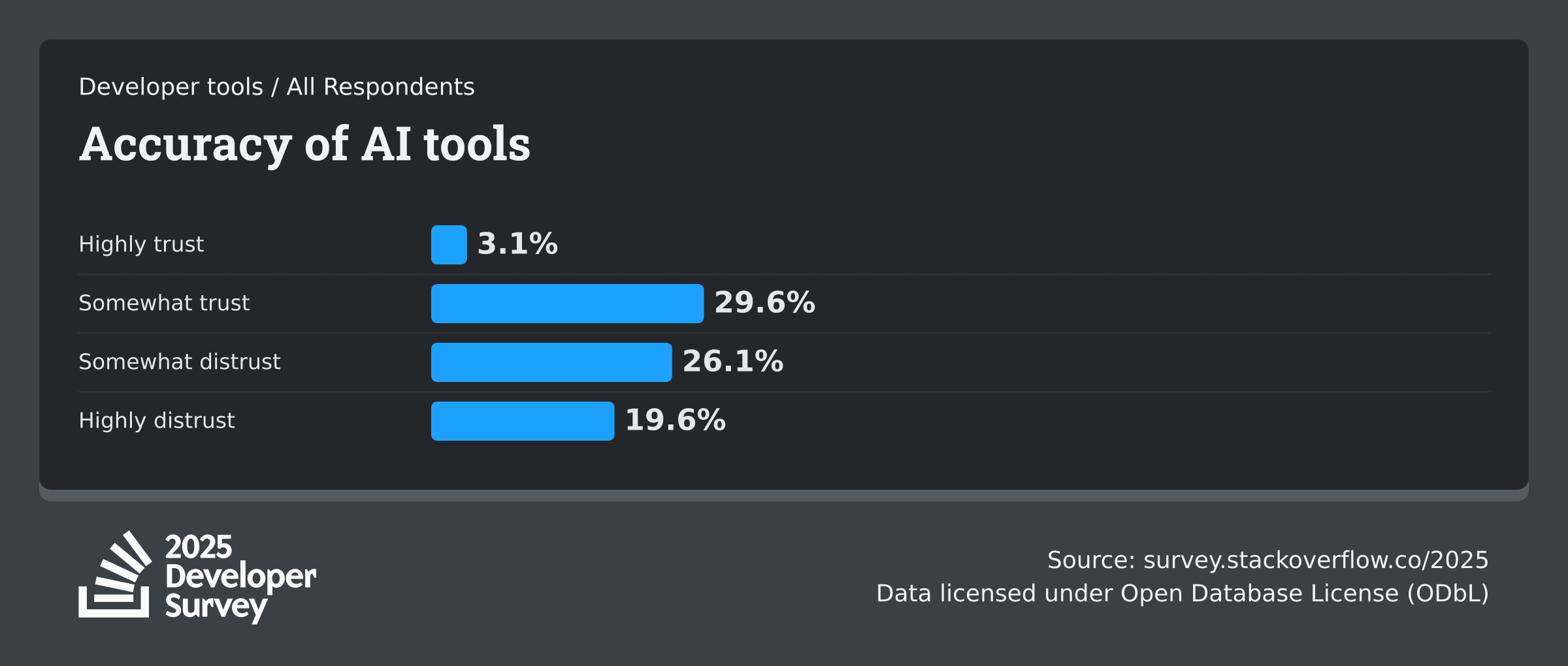

Recent stats back this up. The 2025 Stack Overflow Developer Survey highlighted a trend that shouldn't surprise anyone: high usage, low trust. While nearly 80% of developers are using AI tools, only a tiny fraction say they highly trust what comes out of them. Most of us are stuck in a "trust but verify" loop.

Here’s the problem: If you have to verify every command the AI gives you by checking the official docs anyway, the AI has saved you zero time. Actually, it cost you time.

Imagine you ask: "How do I increase the memory limit?"

The AI confidently says: "Add memory_limit: 512 to your config.yaml file."

You try it. The app crashes. Turns out that parameter was killed off in version 2.0, but the AI was trained on data from version 1.5 ☹️

This is a support nightmare. Your documentation is supposed to be the "Source of Truth." If your AI interface waters down that truth with guesses, you aren't just annoying your users; you’re actively sabotaging them.

The Uncanny Valley of Support

You know the Uncanny Valley? It’s that creepy feeling you get when a robot looks almost human but not quite. Well, we’re seeing the same thing in customer support.

We’ve spent twenty years training users to ignore chatbots. For years, that little bubble in the corner meant:

- A dumb decision tree ("Press 1 for Sales").

- A blocker preventing you from emailing a real human.

- Generic articles you already found yourself.

Now, we’ve swapped those dumb bots for brilliant LLMs, but the interface is exactly the same. The user sees a chat bubble and their brain instantly goes, "Ugh, the Gatekeeper." They assume it’s going to be a hurdle, not a help.

Plus, when you're in troubleshooting mode, you usually want machine-like precision, not human-like empathy.

- Bad AI Experience:

  - You: "Export to CSV fails on large datasets."

  - AI: "I understand how frustrating it can be when your data export doesn't go as planned! Dealing with large datasets is often a challenge. Have you tried checking your internet connection?"

- Good Search Experience:

  - You type: "Export CSV limit"

  - Result: "Handling Large Exports: The default timeout is 30s. For datasets >10k rows, use the Async Export API."

The AI is trying to be nice, but you perceive it as noise. You don't want a friend; you want a fix.

When Does AI Actually Win?

So, is the AI assistant dead? Should we rip the chatbots out and go back to raw HTML lists?

No, definitely not.

The poll results don't mean AI is useless; they probably just mean it’s currently misplaced, or that we're giving it too much priority. That "zero votes" result isn't a rejection of AI; it's a rejection of AI as the primary interface.

To figure out where AI fits, we need to look at two types of users: The Hunter and The Gatherer.

1. The Hunter (Known Unknowns)

- The User: A dev who knows exactly what they need but forgot the syntax.

- The Query: "Python date format," "AWS S3 bucket policy example," "Reset admin password."

- The Best Tool: Search.

- Why: They have the keywords. They just need the destination. Putting a chatbot in front of this user is like putting a receptionist in front of a library shelf; just let them grab the book!

2. The Gatherer (Unknown Unknowns)

- The User: A beginner or someone facing a weird, complex error. They don't know what keywords to search for because they don't even understand the problem yet.

- The Query: "My app is crashing only on Tuesdays when the backup runs, and I see a memory spike."

- The Best Tool: AI.

- Why: Traditional search fails here. Keyword search for "Tuesday crash" will give you nothing. AI is great at synthesis. It can look at the symptoms, connect the dots between different docs (Backups, Memory Management, Cron Jobs), and give you a hypothesis.

The mistake we're making is forcing "Hunter" users into "Gatherer" interfaces. If I want to reset my password, I really don't want to have a conversation about it.
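To make the Hunter/Gatherer split a bit more concrete, here is a rough sketch of how a knowledge base might guess which interface to lead with. The function name `route_query` and the five-word threshold are assumptions made up for illustration; a real product would want to tune this against its own query logs.

```python
def route_query(query: str, max_keyword_words: int = 5) -> str:
    """Guess which interface to lead with for a query.

    Returns "search" for short, keyword-style queries (Hunters) and
    "ai" for longer, symptom-style descriptions (Gatherers).
    The word-count threshold is an illustrative assumption, not a tested value.
    """
    words = query.strip().split()
    # Hunters tend to type a handful of keywords they already know.
    if len(words) <= max_keyword_words:
        return "search"
    # Gatherers describe symptoms in full sentences because they lack the keywords.
    return "ai"


print(route_query("Reset admin password"))
print(route_query("My app is crashing only on Tuesdays when the backup runs, and I see a memory spike."))
```

A heuristic this crude will misfire sometimes, which is one more reason to keep search visible either way and treat the result as a hint about what to show first, not a gate.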

The Fix: Don't Bury the Content

The lesson here isn't that people hate AI. It's that people hate inefficiency.

Hiding your documentation behind a chat interface is a step backward. We are effectively taking the open web (browseable, linkable, scannable) and stuffing it back into a tiny terminal window.

The ideal knowledge base of the future isn't one that replaces navigation with a chatbot. It’s a Hybrid Model that respects where the user is at.

1. Search First, Chat Second

Don't hide the search bar. Make it the hero. Make sure your keyword search is lightning fast and can handle typos. If the user finds the article, you win. Only offer the Ask AI option if search fails or if the user asks for help connecting the dots.
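Here is a minimal sketch of that "search first, chat second" flow, using Python's standard `difflib` for typo tolerance. The `ARTICLES` index, its URLs, and the function names are all hypothetical; a production search would sit on a proper search engine, not a dictionary.

```python
from difflib import get_close_matches

# Hypothetical article index (title -> path), invented for this example.
ARTICLES = {
    "Reset API Key": "/docs/reset-api-key",
    "Reset Password": "/docs/reset-password",
    "Handling Large Exports": "/docs/large-exports",
}


def search(query: str, cutoff: float = 0.6) -> list[str]:
    """Return titles where every query word closely matches a title word."""
    query_words = query.lower().split()
    if not query_words:
        return []
    results = []
    for title in ARTICLES:
        title_words = title.lower().split()
        # get_close_matches tolerates small typos such as "pasword".
        if all(get_close_matches(w, title_words, n=1, cutoff=cutoff) for w in query_words):
            results.append(title)
    return results


def handle_query(query: str) -> dict:
    """Show search results when there are any; offer the AI only as a fallback."""
    hits = search(query)
    if hits:
        return {"mode": "search", "results": hits}
    return {"mode": "offer_ai", "results": []}


print(handle_query("reset pasword"))
print(handle_query("quantum flux"))
```

The design choice that matters is the order: the AI option only appears once the fast, deterministic path has come up empty.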

2. Inline AI, Not "Chatbot" AI

Instead of a separate chat window that covers up your content, put the AI inside the content.

- Summarization: "TL;DR this page for me."

- Translation: "Show me this code snippet in Python instead of Java."

- Troubleshooting: "I'm getting an error running this specific command."

This keeps the user grounded in the "Source of Truth" (the docs) while using AI as a utility belt to make that truth more useful.

3. Transparency and Sourcing

If your AI answers a question, it has to show its work. Not just a tiny footnote, but a big link: "Sourced from: [Configuration Guide]." This lets the user verify the info instantly, rebuilding that trust that the "Black Box" destroys.
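One way to enforce this is to make sources part of the answer's data shape, so an unsourced answer can't reach the screen. The sketch below is an assumption about how that might look; the `Source` and `Answer` types, the example URL, and the fallback message are invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Source:
    title: str
    url: str


@dataclass(frozen=True)
class Answer:
    text: str
    sources: tuple[Source, ...]


def render_answer(answer: Answer) -> str:
    """Render an AI answer with a prominent source line, or refuse if unsourced."""
    if not answer.sources:
        # No source means no way to verify, so point the user back to the docs.
        return "No documented answer found. Try searching the docs directly."
    links = ", ".join(f"[{s.title}]({s.url})" for s in answer.sources)
    return f"{answer.text}\n\nSourced from: {links}"


sourced = Answer(
    text="Set the memory limit in your config file.",
    sources=(Source("Configuration Guide", "/docs/configuration"),),
)
print(render_answer(sourced))
```

Refusing to render an answer without a source is a deliberate trade-off: the user sometimes gets "go search" instead of a guess, which keeps the docs as the "Source of Truth."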

Respect the User's Intelligence

That 0% on our poll was a wake-up call. It’s a reminder that our users are busy, smart, and goal-oriented. They don't see our software as a friend to chat with; they see it as a tool to be mastered.

If your knowledge base forces users to talk to a bot to get simple answers, you are adding friction, not removing it. You’re just putting a gatekeeper between the user and the manual.

The most human-centric thing you can do isn't to make your computer pretend to be a human. It's to design a system that respects the human's time.

So, listen to your users. Sometimes, they don't want a conversation. They just want the answer.